In my previous two posts (here and here) of this series, we identified the different types of data drift and looked at how to measure where and how much drift is occurring. However, all of this needs to fit into the overall deployment process, and that’s where model monitoring comes in. In this post I’ll attempt to provide some guidelines on developing an effective model monitoring plan.

Why do we need model monitoring?

So you develop a model that performs great, put it into production and then what? It’s tempting to move onto the next exciting problem.

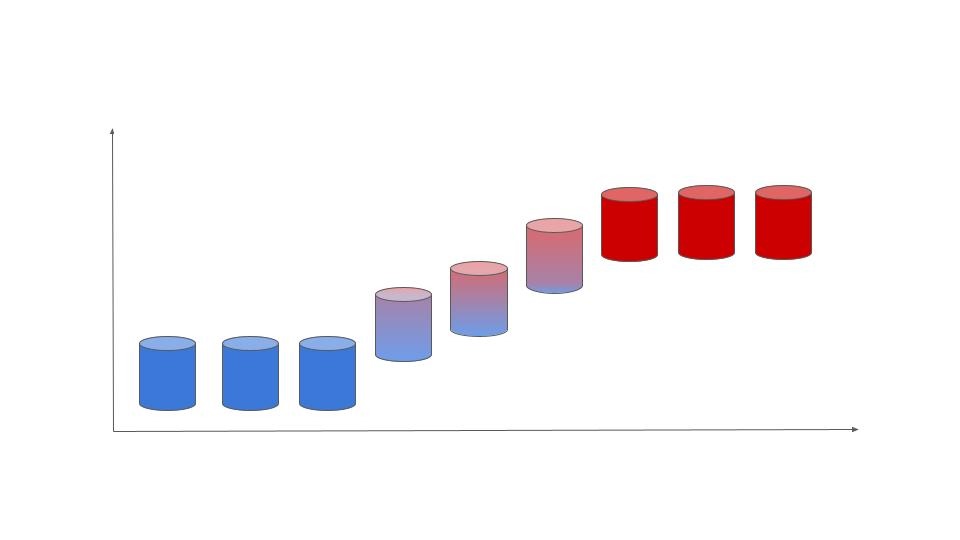

However, once deployed, your model will be working with new, unseen data. Over time, its performance will start to degrade, not because you built a bad model, but because changes in the environment it operates in, broken pipelines, and changes to features and data sources will inevitably take their toll.

Unless you have model monitoring in place, you might not realize that your model’s performance has degraded until you start to see worse results on whatever business problem it was trying to solve. Depending on the stakes and risk of what the model is responsible for, the consequences can range from money wasted on irrelevant recommendations and understocked items to flawed credit and hiring decisions.

To get ahead of such situations, we need to have model monitoring in place, so that we can intervene early and prevent the performance from deteriorating further. To learn more about model recalibration, see part 4.

The majority of data science and machine learning courses, and even our personal interests, usually focus on the model training phase. To train a successful model you also need good data pre-processing, feature selection, hyperparameter tuning and performance evaluation, so those get a lot of attention too. Data scientists are increasingly required to be familiar with deployment practices as well. But the focus is rarely on model monitoring, even though it’s the lack of a model monitoring plan that can cause the most damage without you noticing.

What makes a good Model Monitoring Plan

When deploying software, we usually monitor things like uptime, latency and memory utilization. But with machine learning models there’s a lot more to consider. A data scientist might closely follow a model during deployment and shortly afterwards, as they may need to collect feedback and make sure everything is in order. Before long, though, they will most likely move on to other projects, and the deployed model can easily get neglected. It’s therefore usually a good idea to make a model monitoring plan during development itself, so that implementing model monitoring does not become an afterthought. So how do we come up with an effective model monitoring plan?

Performance Metrics

During model development you would have decided upon some suitable metrics to evaluate the performance of the model. Depending on the model and its use case, different performance metrics make the most sense. For example, in fraud models we want as few false negatives as possible, so overall accuracy doesn’t mean much. Similarly, in some models we are also concerned about the performance on different segments (age, gender, etc.). Therefore, it’s important to continue to monitor the same metrics that helped you decide whether the model was successful in the first place.
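As a minimal sketch of what tracking such metrics could look like, the snippet below computes recall (the share of actual positives the model catches, which is what matters when false negatives are costly) both overall and per segment. The function names, the toy labels and the age bands are all made up for illustration; in practice you would plug in whatever metric you chose during development.

```python
from collections import defaultdict


def recall(y_true, y_pred):
    """Recall = TP / (TP + FN). Returns None when there are no actual positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if (tp + fn) else None


def recall_by_segment(y_true, y_pred, segments):
    """Compute recall separately for each segment label."""
    grouped = defaultdict(lambda: ([], []))
    for t, p, s in zip(y_true, y_pred, segments):
        grouped[s][0].append(t)
        grouped[s][1].append(p)
    return {s: recall(ts, ps) for s, (ts, ps) in grouped.items()}


# Toy data (hypothetical): 1 = fraud, 0 = not fraud
y_true = [1, 1, 0, 1, 0, 1]
y_pred = [1, 1, 0, 1, 0, 0]
segments = ["18-35", "18-35", "18-35", "36+", "36+", "36+"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
overall_recall = recall(y_true, y_pred)
per_segment = recall_by_segment(y_true, y_pred, segments)

print(f"accuracy={accuracy:.2f}, recall={overall_recall:.2f}")
print(per_segment)
```

In this toy example the overall numbers look acceptable, but the per-segment view shows that the model misses half of the positives in one age band, which is exactly the kind of thing an overall metric can hide.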

Feature Distributions

Similar to initial EDA (Exploratory Data Analysis) we can monitor attributes of the most important features to see how they are changing.

- Outliers / Range of possible values

By observing the range of possible values in the training dataset and the range of possible values based on domain knowledge, we can be alerted if there are new values coming in that are outside the expected bounds. This could be happening because the data input for the feature has truly changed or it might be an error at the data source/data processing.

- Number of Nulls

Depending on the feature, we might expect a certain average number of null values to be present. However, if the number of null values starts to increase over time, we may have a problem. This becomes a bigger issue if the feature with increased null values is one of the model’s top features. By monitoring for this, we can catch the increase and intervene early.

- Histograms

By taking snapshots of histograms, we can observe the change in the data distribution over time.
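The three checks above can be sketched in a few lines of plain Python. This is only an illustration under some assumptions: missing values are represented as `None`, the expected range (18 to 100) and the bin edges are made-up values standing in for what you would derive from the training data and domain knowledge, and the helper names are hypothetical.

```python
from bisect import bisect_right


def null_rate(values):
    """Share of values that are missing (represented here as None)."""
    return sum(1 for v in values if v is None) / len(values) if values else 0.0


def out_of_range_share(values, low, high):
    """Share of non-null values that fall outside the expected [low, high] bounds."""
    present = [v for v in values if v is not None]
    if not present:
        return 0.0
    return sum(1 for v in present if v < low or v > high) / len(present)


def histogram(values, edges):
    """Count non-null values per bin; values outside the outer edges are skipped.

    Bins are [edges[i], edges[i+1]), except the last bin, which includes its right edge.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v is None or v < edges[0] or v > edges[-1]:
            continue
        idx = min(bisect_right(edges, v) - 1, len(counts) - 1)
        counts[idx] += 1
    return counts


# Toy "age" feature (hypothetical): training snapshot vs. current production data
training_ages = [22, 35, 41, 58, 63, None]
current_ages = [25, None, None, 47, 130, 61]
edges = [18, 40, 60, 100]  # assumed bin edges, fixed at training time

print("null rate:", null_rate(training_ages), "->", null_rate(current_ages))
print("out of range:", out_of_range_share(current_ages, 18, 100))
print("histogram:", histogram(training_ages, edges), "->", histogram(current_ages, edges))
```

Keeping the bin edges fixed from the training snapshot is a deliberate choice here: it makes histograms from different periods directly comparable.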

Thresholds

The main point of coming up with a monitoring plan and implementing it is so that we can catch model degradation early. To do this, we want to have different thresholds in place. Thresholds are typically placed on the model’s performance metrics, but can also be extended to things like feature drift. A good practice is to have a “Warning” threshold and a “Critical” threshold. When the “Warning” threshold is crossed, it doesn’t mean that the model needs to be replaced or recalibrated immediately, but you can start to investigate. It might be a temporary situation or something more serious; either way, you have time to investigate and react. The “Critical” threshold can be set to the absolute minimum the model should be performing at. When it is crossed, something is seriously wrong and immediate escalation needs to take place. The model may even be taken out of production or human intervention put in place. These are just examples and not hard rules. The actual arrangement depends on the model, risk level and business.
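A two-level threshold check can be as simple as the sketch below. It assumes a metric where higher is better (such as recall), and the threshold values 0.80 and 0.70 are placeholders, not recommendations; the function name is hypothetical.

```python
def alert_level(metric_value, warning, critical):
    """Classify a higher-is-better metric against two thresholds.

    Assumes critical <= warning: dropping below `warning` means "start
    investigating", dropping below `critical` means "escalate immediately".
    """
    if critical > warning:
        raise ValueError("critical threshold must not exceed warning threshold")
    if metric_value < critical:
        return "critical"
    if metric_value < warning:
        return "warning"
    return "ok"


# Placeholder thresholds for a recall metric (assumed values)
for observed in (0.85, 0.75, 0.65):
    print(observed, alert_level(observed, warning=0.80, critical=0.70))
```

For a lower-is-better metric (for example an error rate or a drift score), the comparisons would simply be flipped.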

Business KPIs

Most models are attempting to solve or improve a business problem, which means their effectiveness can be seen in the form of a business metric. By the time we see a dip in KPIs, the model’s performance has usually already degraded. For example, if a model that predicts customer churn doesn’t perform well, we will notice a drop in quarterly subscription renewals. It is therefore important to monitor the KPIs as well, both to make sure everything is in order and to adjust our monitoring plan if we notice any issues.

Monitoring Frequency

Now that we’ve decided on our performance metrics and set suitable thresholds, we have to decide how often the monitoring and reviews need to take place. This depends on the criticality of the model output, how easily the data and trends can change, and the resources available. Some models can be checked annually to see whether the thresholds have been breached, with action taken accordingly. For more critical outputs, more frequent monitoring can be set up.

Why not set up real-time monitoring for all models?

The most important models may have their own real-time monitoring and dashboards that display the status of the model. However, depending on the company, the technical resources might not be available to have constant, dedicated monitoring set up. With real-time monitoring, maintenance also becomes more of an issue than with annual or quarterly monitoring through reports. Any changes to thresholds, to who needs to be notified, and so on need to be applied immediately, and with a large number of models, the chances of the configurations being out of date increase.

Having said that, there definitely are use cases where the model needs to be updated much more frequently. In this series of articles I’m focussing on models that are monitored over longer periods of time (quarterly, yearly, and maybe monthly but not more frequent).

Stakeholders

With machine learning deployments there are usually multiple stakeholders. Some are concerned with the data and the model itself (data scientists, data engineers, data science managers) and others with the outcome of the model (model users, business stakeholders). Some industries, such as healthcare, insurance or finance, will also have compliance teams in place to check on the fairness of the model. When the model is found to be underperforming, the appropriate stakeholder(s) must be contacted, depending on the situation. It’s always better to have this protocol decided beforehand than to scramble to figure it out after a problem arises.
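One lightweight way to decide the protocol beforehand is to write it down as data. The sketch below is purely illustrative: the roles are the ones mentioned above, but which role gets notified at which alert level is an assumption you would replace with your own arrangement, and the names `ESCALATION` and `who_to_notify` are hypothetical.

```python
# Hypothetical escalation protocol: the mapping of alert level to roles
# is an assumption for illustration, not a recommendation.
ESCALATION = {
    "warning": ["data scientist", "data engineer"],
    "critical": [
        "data scientist",
        "data engineer",
        "data science manager",
        "business stakeholders",
    ],
}

# Extra recipients for regulated industries (assumed rule)
REGULATED_EXTRA = ["compliance team"]


def who_to_notify(level, regulated=False):
    """Return the roles to contact for a given alert level."""
    recipients = list(ESCALATION.get(level, []))
    if regulated and recipients:
        recipients += REGULATED_EXTRA
    return recipients


print(who_to_notify("warning"))
print(who_to_notify("critical", regulated=True))
```

Keeping this mapping alongside the model’s other configuration makes it easier to review and update when people or responsibilities change.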

Recalibration Plan

Once the model is found to be consistently underperforming, the relevant stakeholders have to decide what actions to take. This usually involves retraining the model (model recalibration) or creating a new model.

In Conclusion

Model monitoring might not be as fun as developing a model, but it is a vital part of a successful model deployment. Having a good model monitoring plan and setting it up before deployment can save everyone a lot of headaches down the road.
